Why use FPGAs for Compute Acceleration?

FPGAs offer high performance, workload flexibility and energy-efficient operation for a range of HPC applications.

Core FPGA Benefits for HPC

The FPGA value proposition for HPC has strengthened significantly in recent years.

Our BWNN white paper demonstrates four key advantages:

Performance Acceleration

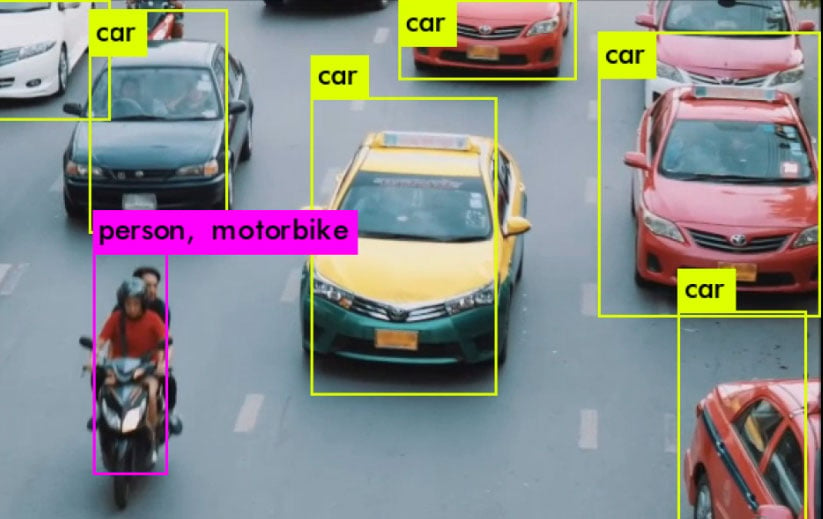

Working alongside CPUs, FPGAs form part of a heterogeneous approach to computing. For certain workloads, FPGAs provide a significant speedup over CPUs: in this case, 50x faster for machine learning inference.
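How much of a per-workload speedup carries over to a whole application depends on how much of the application runs on the FPGA. Amdahl's law, a standard model and not something taken from the white paper, gives the overall speedup $S$ when a fraction $p$ of the original runtime is offloaded and accelerated by a factor $s$:

$$S = \frac{1}{(1 - p) + \dfrac{p}{s}}$$

For example, with $s = 50$ and a hypothetical $p = 0.9$, $S = 1 / (0.1 + 0.018) \approx 8.5$. This is why the CPU-side portion of a heterogeneous design matters as much as the accelerated kernel.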

Flexibility in Application Tailoring

FPGA tools let you tailor the hardware to the application: the fabric implements only what’s needed, including hardened floating-point blocks when required. For BWNN’s weights, we used only a single bit plus a mean scaling factor and still achieved acceptable accuracy while saving significant resources.
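A single-bit weight scheme with a mean scaling factor can be sketched as follows. This assumes the scale is the mean of the absolute weight values (the choice popularized by XNOR-Net) computed once per weight vector; the function names are hypothetical and not taken from the BWNN design. Because every weight becomes +1 or -1, a dot product needs only additions and subtractions plus one final multiply by the scale, which is where an FPGA can save multipliers and on-chip memory.

```python
from typing import List, Tuple


def binarize(weights: List[float]) -> Tuple[List[int], float]:
    """Map each weight to one bit (1 means +1, 0 means -1) and return the scale.

    Assumption: the scale is the mean absolute weight value.
    """
    alpha = sum(abs(w) for w in weights) / len(weights)
    bits = [1 if w >= 0 else 0 for w in weights]
    return bits, alpha


def pack_bits(bits: List[int]) -> int:
    """Pack 1-bit weights into one integer word, least significant bit first."""
    word = 0
    for i, b in enumerate(bits):
        word |= b << i
    return word


def binary_dot(bits: List[int], alpha: float, x: List[float]) -> float:
    """Approximate dot(weights, x) with adds/subtracts and one final multiply."""
    acc = 0.0
    for b, xi in zip(bits, x):
        acc += xi if b else -xi  # a +1 weight adds the input, a -1 weight subtracts it
    return alpha * acc


if __name__ == "__main__":
    w = [0.6, -0.4, 0.5, -0.5]
    x = [2.0, 1.0, 2.0, 1.0]
    bits, alpha = binarize(w)
    print("bits:", bits, "packed:", pack_bits(bits), "scale:", alpha)
    # Compare the binarized approximation with the full-precision dot product.
    print("approx:", binary_dot(bits, alpha, x))
    print("exact: ", sum(wi * xi for wi, xi in zip(w, x)))
```

Storing four weights here takes four bits plus one scale value, instead of four floating-point numbers; in a real network the accuracy of the approximation is recovered through training rather than guaranteed per vector.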

Energy Efficiency

Performance per watt matters not only at the edge but also in the datacenter, where the power budget constrains both space and the cost of power. FPGAs can run the latest efficient libraries at far higher performance per watt than CPUs.
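Because one watt is one joule per second, performance per watt is equivalently the work done per unit of energy, which is what a datacenter's power bill ultimately pays for:

$$\text{performance per watt} = \frac{\text{throughput (operations/s)}}{\text{power (W)}} = \frac{\text{operations}}{\text{joule}}$$

Comparing two devices on this metric therefore compares how much energy each spends on the same workload, independent of how fast either one runs.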

Software-based Development

With BittWare’s exclusive optimized OpenCL BSP, you can tap into both software-oriented developers and the latest software libraries. This allowed us to quickly adapt the YOLOv3 framework, which offers improved performance over older ML libraries.

Dive Deeper into HPC

Read the 2D FFT White Paper

Read the BWNN White Paper

Applications

We target applications where the demand to process data outpaces traditional CPU-based architectures.

Artificial Intelligence/Machine Learning Inference

Scientific Simulations

Image/Video Processing

Particle Physics Modeling

Gene Sequencing

Molecular Dynamics

When to Use FPGAs for HPC

FPGAs allow customers to create application-specific hardware implementations that exhibit the following properties:

- Highly parallel implementations

- Memory access patterns that are not cache friendly

- Data types that are not natively supported in CPUs or GPUs

- Low latency or deterministic operation

- A need to interface to external I/O
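As an illustration of a data type that CPUs and GPUs do not support natively, the sketch below models a hypothetical 12-bit signed fixed-point format (4 integer bits including the sign, 8 fractional bits) with saturation. On an FPGA, datapath width is a design choice rather than being fixed at 32 or 64 bits, and HLS tools expose such formats as arbitrary-precision types (for example, `ap_fixed` in AMD Vitis HLS). The function names and bit widths here are assumptions chosen for illustration, not part of any BittWare tool.

```python
def to_fixed(value: float, int_bits: int, frac_bits: int) -> int:
    """Quantize value to a signed fixed-point integer code, saturating at the limits.

    int_bits includes the sign bit; the total width is int_bits + frac_bits.
    Python's round() breaks ties to even.
    """
    width = int_bits + frac_bits
    lo = -(1 << (width - 1))       # most negative representable code
    hi = (1 << (width - 1)) - 1    # most positive representable code
    code = round(value * (1 << frac_bits))
    return max(lo, min(hi, code))  # saturate instead of wrapping around


def from_fixed(code: int, frac_bits: int) -> float:
    """Convert a fixed-point integer code back to a float."""
    return code / (1 << frac_bits)


if __name__ == "__main__":
    # Hypothetical 12-bit format: 4 integer bits (incl. sign) + 8 fractional bits.
    for v in (1.5, 3.14159, 100.0, -100.0):
        code = to_fixed(v, 4, 8)
        print(v, "->", code, "->", from_fixed(code, 8))
```

Each value then occupies 12 bits of storage and logic instead of 32, at the cost of a limited range (about -8 to +8) and a resolution of 1/256.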

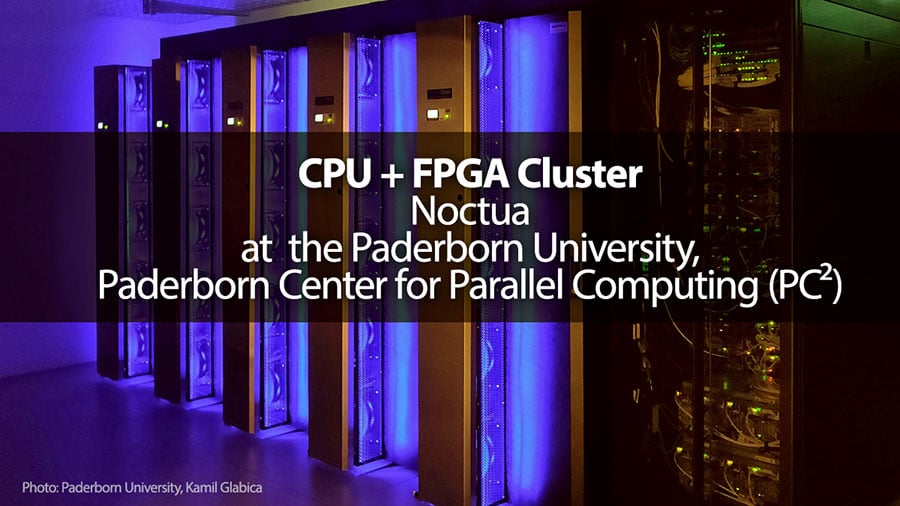

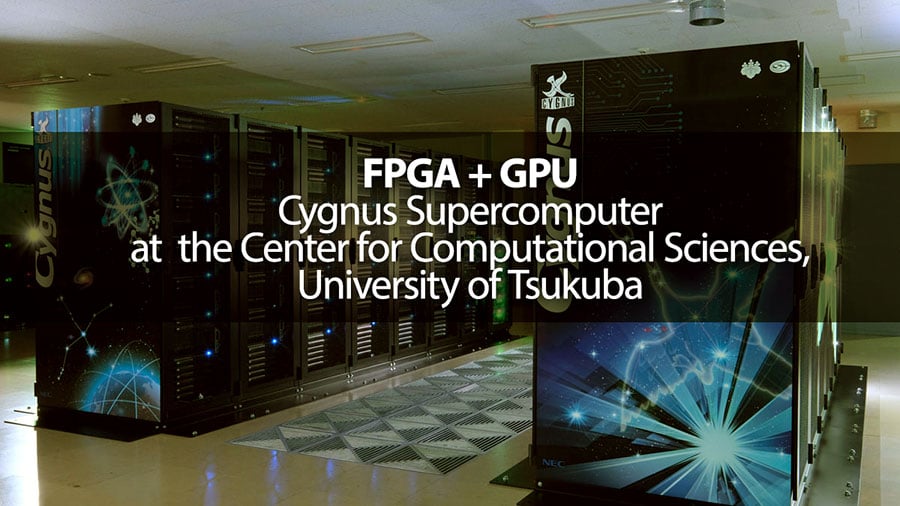

Two Customers Using BittWare Cards in HPC
